How vCon and JLINC Enable Conversational and Agentic Commerce

For the better part of a year, we in the JLINC team have been actively supporting Strolid, and now its spin-off Vconic, in building and deploying the vCon draft standard. vCon is used for capturing, storing, exchanging, and analysing conversational data in a unified, auditable, privacy-aware way. JLINC's role is to underpin data provenance recording and protect the integrity of the conversation record.

So why is a standardised means of recording conversations important and useful? To my mind, based on more years' experience in CRM than I care to recall, it addresses what has long been the weak point of CRM in practice.

As vCon is, at its core, a standardised data format, let's look at where records of conversations fit into the classic CRM data model (i.e. the proverbial single or complete view of the customer).

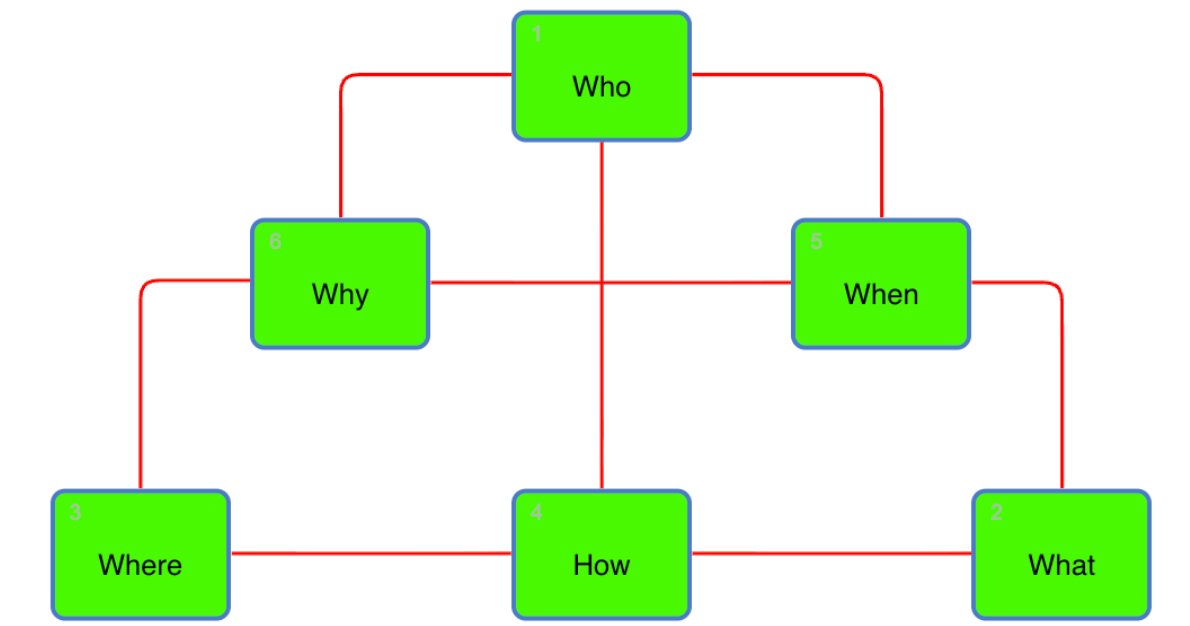

The visual below shows the logic that underpins all customer management systems, either overtly or tacitly. Organisations want to understand the following and then act on that knowledge to meet their objectives.

They wish to have robust, actionable, compliant records of:

WHO is the Customer, or Prospect (or citizen, volunteer, patient etc.)?

WHAT is the Product (or Service) they are engaging or could engage with?

WHERE is this engagement happening, i.e. Outlet or Distribution Channel?

WHEN are the key dates and times of historic or future activities?

HOW is the product/service being presented to the customer?

WHY was a purchase made (or indeed not made)?

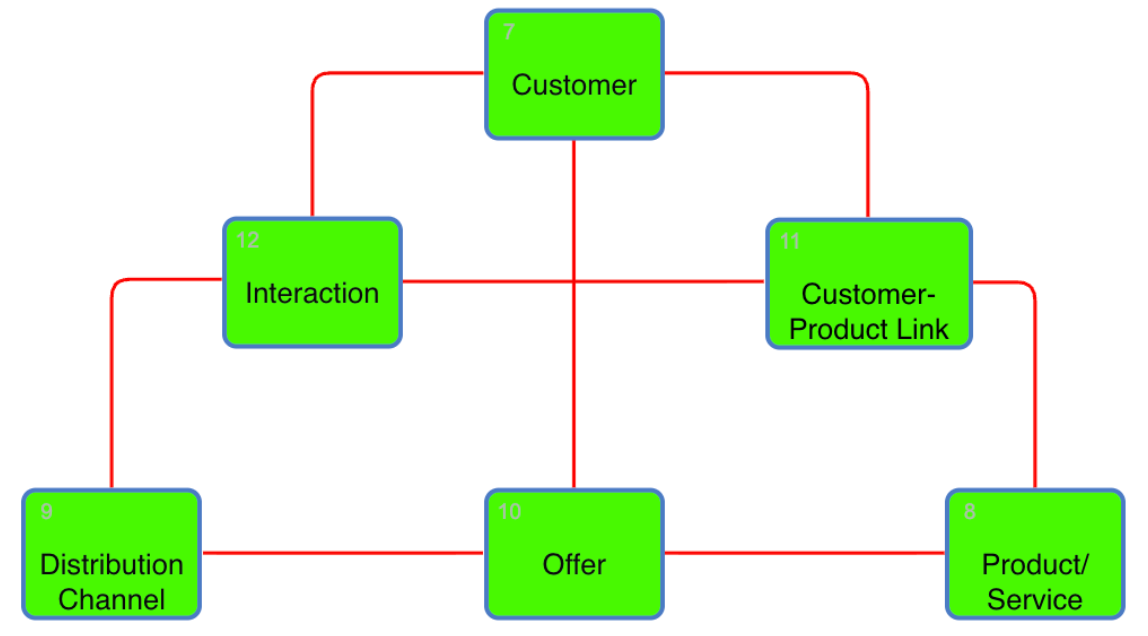

This translates into more technical names at the database level, as shown below.

The problem is that the who, what, where, when and how are fact-based: if an organisation gathers that data well, those questions are all readily answerable.

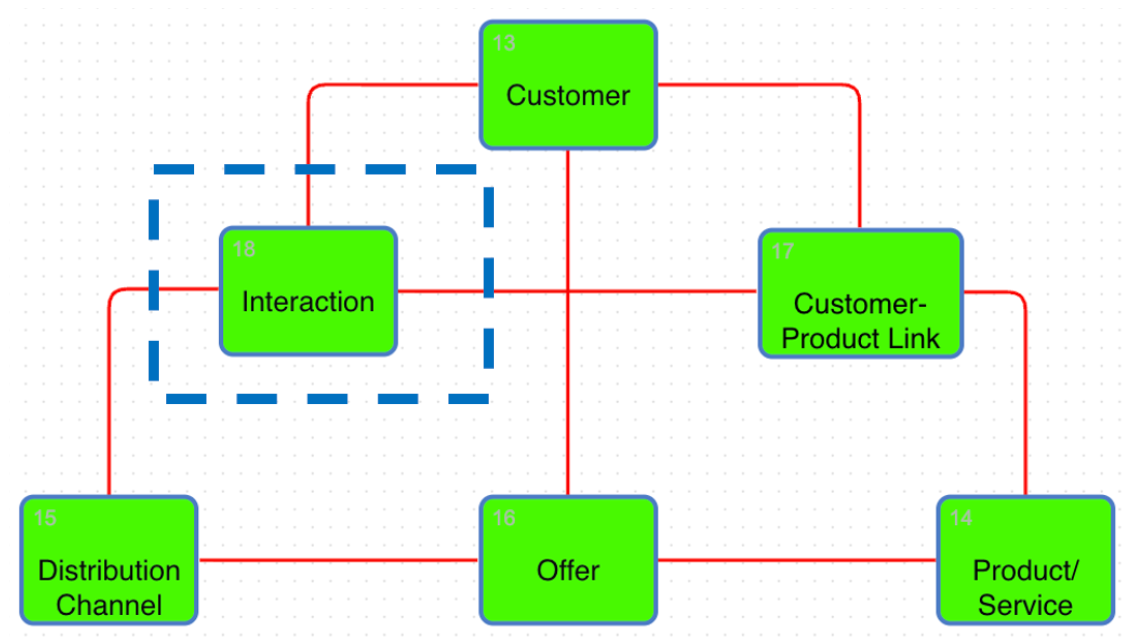

But understanding and using the WHY data has historically been very difficult, quite simply because until now there has been no standardised means of capturing data that almost always originates in conversations. Each organisation has typically made its own best effort. That's why we so often hear 'this call is being recorded in order to train our teams' (and, increasingly, to train our AIs). It is typically possible to manage and use that interaction or conversation record robustly within a single system, e.g. the CRM system, the e-commerce shop, marketing communications, or customer service case management. But it is incredibly difficult to build a consolidated view of customer interactions and conversations across systems. And of course customers can have things to say across each and every channel.

Step forward vCon.

vCon (Virtual Conversation) is a new open standard, or more precisely, a proposed file format and protocol under discussion at the Internet Engineering Task Force (IETF). It is designed to give conversational data a common container, so that it can be captured, stored, exchanged, and analysed consistently across systems.

A vCon contains four parts: participants, recordings, analyses, and attachments. Participants are those involved in the conversation. Recordings are the raw media, comprising voice, video, messaging, email, etc., with privacy and GDPR protections, as well as support for compliance with the 18 US states that have similar privacy legislation. Analyses are derived outputs, such as transcripts, sentiment, intent, or predictions (such as likelihood to recommend), with full traceability of how each analysis was done. Lastly, attachments (metadata and provenance) comprise timestamps, channels, and whether a conversation is authentic or a deepfake, etc.
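To make the four parts more concrete, here is a minimal sketch of a vCon-like record built as a Python dict and serialised to JSON. The top-level keys (`parties`, `dialog`, `analysis`, `attachments`) loosely follow the IETF vCon draft, where the raw media described above as "recordings" sit under `dialog`. The field details are simplified, and the version string, the helper `make_vcon` and all sample values are hypothetical; the exact schema should be checked against the current draft.

```python
import json
import uuid
from datetime import datetime, timezone


def make_vcon() -> dict:
    """Build a minimal, illustrative vCon-like record (hypothetical helper, simplified schema)."""
    now = datetime.now(timezone.utc).isoformat()
    return {
        "vcon": "0.0.1",  # assumed version string; check the current draft
        "uuid": str(uuid.uuid4()),
        "created_at": now,
        # Participants: who took part in the conversation
        "parties": [
            {"name": "Customer", "mailto": "customer@example.com"},
            {"name": "Support agent", "tel": "+15555550100"},
        ],
        # Recordings: the raw media (here, a short text message)
        "dialog": [
            {
                "type": "text",
                "start": now,
                "parties": [0, 1],  # indices into "parties"
                "mimetype": "text/plain",
                "body": "I'd like to return these boots, they are too small.",
            }
        ],
        # Analyses: derived outputs, noting which tool produced them
        "analysis": [
            {
                "type": "intent",
                "dialog": 0,  # index into "dialog"
                "vendor": "example-classifier",  # hypothetical analysis tool
                "body": {"intent": "return", "reason": "wrong size"},
            }
        ],
        # Attachments: metadata and provenance
        "attachments": [
            {"type": "channel", "body": "web chat"},
        ],
    }


record = make_vcon()
print(json.dumps(record, indent=2))
```

Keeping participants, media and analyses in separate lists, linked by index, is what lets an analysis point back to the exact piece of conversation it was derived from.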

What that enables is incredibly important: much more robust approaches to answering the 'why did this happen?' question, at massive scale, with the ability to learn from those outputs and factor them into AI model building and deployment.

Let’s consider some classic CRM and e-commerce use cases that illustrate this:

- Why did someone respond to that particular advert? Which creative treatment, placement or call to action worked, and why?

- Why did that lead convert? And why did this other one not convert?

- Why did the customer buy that particular product or service variant, both in isolation and in comparison with others from our portfolio or from competitors?

- Why do so many people drop out or pause at a particular part of a customer journey?

- Why did the customer fund the purchase that way, versus other options?

- Why did the customer return an item?

- Why did that customer service case emerge?

- Why did this customer case resolve quickly and this one did not?

- Why did this case escalate and become a complaint?

All of these can be addressed with the vCon standard, realising opportunities for significant revenue gains, cost reductions and organisational learning. That learning can in turn shape AI deployments, reinforcing good practices and minimising those that have shown negative consequences.
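As an illustration of answering a question like "why did the customer return an item?" at scale, the sketch below tallies reason labels across a collection of vCon-like records. It assumes an upstream analysis step has already written a dict with hypothetical `intent` and `reason` keys into each analysis body; real analysis outputs will vary by tool. The function `why_counts` and the sample data are illustrative only.

```python
from collections import Counter


def why_counts(vcons: list[dict], intent: str) -> Counter:
    """Tally the 'reason' labels of analyses whose intent matches.

    Assumes each analysis body is a dict with hypothetical "intent" and
    "reason" keys, produced by some upstream classifier.
    """
    reasons = Counter()
    for vcon in vcons:
        for analysis in vcon.get("analysis", []):
            body = analysis.get("body")
            if isinstance(body, dict) and body.get("intent") == intent:
                reasons[body.get("reason", "unknown")] += 1
    return reasons


sample = [
    {"analysis": [{"type": "intent", "body": {"intent": "return", "reason": "wrong size"}}]},
    {"analysis": [{"type": "intent", "body": {"intent": "return", "reason": "damaged"}}]},
    {"analysis": [{"type": "intent", "body": {"intent": "return", "reason": "wrong size"}}]},
    {"analysis": [{"type": "intent", "body": {"intent": "purchase", "reason": "price"}}]},
]

print(why_counts(sample, "return").most_common())
# [('wrong size', 2), ('damaged', 1)]
```

Because every record shares the same structure, the same loop works whether the conversations came from the CRM, the e-commerce shop or the service desk.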

JLINC's role is to underpin data provenance both within and across organisational systems. JLINC follows the data along the vCon path to assure its credibility and integrity. If human or machine error has occurred, JLINC prevents it from becoming part of the end result. This is a critical part of the vCon capability: the vCon data and resulting outputs can be traced back to the raw data and communication channels, whether voice, email, web, chatbot or, now, AI agents have been part of the conversation.
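This does not describe how JLINC works internally; it is only a generic sketch of the underlying idea of tamper-evident provenance. Each entry records a SHA-256 hash of the current vCon content plus the hash of the previous entry, so a later silent edit to the record, or to the chain itself, is detectable. The functions `append_entry` and `verify` and the entry fields are hypothetical, and a production scheme would also use digital signatures to bind each entry to the party that made it.

```python
import hashlib
import json


def canonical_hash(obj) -> str:
    """SHA-256 over a canonical JSON serialisation (sorted keys, no spaces)."""
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def append_entry(chain: list[dict], vcon: dict, actor: str, action: str) -> None:
    """Append a provenance entry linking the current vCon state to the previous entry."""
    prev = chain[-1]["entry_hash"] if chain else "0" * 64
    entry = {
        "actor": actor,    # system or agent that touched the record (hypothetical field)
        "action": action,  # e.g. "created", "analysed" (hypothetical field)
        "vcon_hash": canonical_hash(vcon),
        "prev_hash": prev,
    }
    entry["entry_hash"] = canonical_hash(entry)
    chain.append(entry)


def verify(chain: list[dict], vcon: dict) -> bool:
    """Check every link in the chain and that the latest entry matches the current vCon."""
    prev = "0" * 64
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev or canonical_hash(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return bool(chain) and chain[-1]["vcon_hash"] == canonical_hash(vcon)


# Usage: record two processing steps, then simulate an after-the-fact edit
vcon = {"parties": [{"name": "Customer"}], "dialog": [], "analysis": []}
chain: list[dict] = []
append_entry(chain, vcon, "crm", "created")
vcon["analysis"].append({"type": "sentiment", "body": "negative"})
append_entry(chain, vcon, "analytics", "analysed")
print(verify(chain, vcon))  # True

vcon["analysis"][0]["body"] = "positive"  # silent edit, no new entry
print(verify(chain, vcon))  # False
```

In this sketch only the latest vCon state is compared directly; earlier states are covered by the hashes already stored in the chain.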

With vCon, conversational data can now be brought into the organisation's data assets. Conversational commerce can move to a new level, and agentic commerce can fly in the knowledge that what the agent(s) have said and done has been recorded and is available for downstream learning and audit.

To discuss our vCon and JLINC capabilities, get in touch via our web form.